Yep. Shameless title. Oh well.

I gotta feed the beast every once in a while. Here, I lay out the story of Canary Waves, my development process, and our path forward. Enjoy!

For more information, email jack@canary-waves.com.

Building the Idea

It was 2020. I got called into an incident investigation. At operations, it's common to bring in people from other departments to get a fresh set of eyes on an incident. In this particular case, the injured employee was washing their truck, and when they activated the pressure washer, the wand recoiled and hit them in the face. The employee had been wearing the proper PPE (hard hat, gloves, and safety glasses), but the wand damaged the safety glasses, causing a cut to the eye.

In an investigation, multiple sources of data are brought in to help identify what fell short or what could have been in place to prevent the injury: training records, camera recordings, pressure washer maintenance records, and more.

The piece of data that stuck with me, however, was the recorded two-way radio chatter before the incident, during the response, and afterwards.

What struck me was this: why were we only now seeing and reviewing this data? Why did it take someone getting hurt to pull it out of the archives?

That was the seed.

A year later, the minerals manager at this site called me into a session to talk through a new incentive program for frontline operational employees. At the time, the health and safety concept of “leading indicators” was gaining steam. Being asked about leading indicators of environmental performance in this context reminded me of the power of employee radio communication: the leading indicators are in that chatter…

Building the Product

Fast forward to 2022, and I was still thinking about this issue in the mining industry. How many injuries could have been prevented if we had listened to our most valuable asset, our operational employees? So I started sketching out the basic logic flow for the program.

MP3 file → Automatic Speech Recognition (ASR) → Machine Learning Contextual Analysis → Safety Insights Reporting
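As a rough illustration of that flow, here is a minimal Python skeleton. Every name in it (`Utterance`, `Insight`, `run_pipeline`, and the stand-in stage functions) is hypothetical, and the keyword check is only a placeholder for the machine learning analysis step. It just shows how the four stages hand data to each other.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Utterance:
    """One transcribed radio transmission, with timing in seconds."""
    start: float
    end: float
    text: str


@dataclass
class Insight:
    """A flagged utterance plus the label the analysis stage assigned."""
    utterance: Utterance
    label: str


def run_pipeline(
    audio_path: str,
    transcribe: Callable[[str], List[Utterance]],
    analyze: Callable[[Utterance], Optional[str]],
    report: Callable[[List[Insight]], str],
) -> str:
    """Audio file -> ASR -> contextual analysis -> safety insights report."""
    utterances = transcribe(audio_path)  # ASR stage
    insights = []
    for utt in utterances:  # analysis stage: keep only utterances that get a label
        label = analyze(utt)
        if label is not None:
            insights.append(Insight(utt, label))
    return report(insights)  # reporting stage


# Hypothetical stand-ins for each stage, for illustration only.
def fake_transcribe(path: str) -> List[Utterance]:
    return [
        Utterance(0.0, 2.1, "truck three heading to the wash bay"),
        Utterance(2.5, 4.0, "forklift almost clipped the barrier"),
    ]


def keyword_analyze(utt: Utterance) -> Optional[str]:
    # Placeholder for the ML step: flag anything that sounds like a near miss.
    return "possible near miss" if "almost" in utt.text.lower() else None


def text_report(insights: List[Insight]) -> str:
    return "\n".join(
        f"[{i.utterance.start:.1f}s] {i.label}: {i.utterance.text}" for i in insights
    )


print(run_pipeline("shift.mp3", fake_transcribe, keyword_analyze, text_report))
```

Passing each stage in as a function keeps them swappable: a real ASR model or classifier can replace a stub without touching the pipeline itself.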

Once the flow was in place, I needed to figure out how to bring it to life. I had completed a few Python trainings and projects, but this was going to be beyond my experience. I joined Y Combinator's co-founder matching program to see if I could find a software engineer who could help me push this forward.

And it started out great! I had a few sample recordings of mine-site two-way radio traffic, and we were developing a program that produced solid coverage and PDF text outputs. But over the next two years, the meetings became less frequent, and we lost steam. I kept trying to make it work, knowing that consistency builds momentum, but to no avail. Finally, I admitted defeat and decided I needed to take the plunge myself. Luckily, by this time, LLMs had become relevant, even dominant.

So I found a new temporary co-founder in Claude.

My Python background gave me the sense to build some object-oriented principles into my prompts to keep things organized and focused. With the program's logic established, the “vibe coding” sessions could begin.

First things first: I needed to convert my .mp3 file to a text output.

We started with a function built on OpenAI's automatic speech recognition model, Whisper. It took two string parameters: the audio file path and the output CSV path. Simple enough. The first outputs were surprisingly decent, but the process was clunky.
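For anyone curious what that first step can look like, here is a minimal sketch assuming the open-source `openai-whisper` package, where `whisper.load_model` loads a model and `model.transcribe` returns a dict whose `"segments"` entries carry `start`, `end`, and `text`. The function names `transcribe_to_csv` and `write_segments_csv` and the `base` model size are my own illustrative choices, not the original code.

```python
import csv
from typing import Iterable, Mapping

CSV_FIELDS = ["start", "end", "text"]


def write_segments_csv(segments: Iterable[Mapping], output_csv: str) -> int:
    """Write Whisper-style segments (dicts with start/end/text) to CSV; return the row count."""
    rows = 0
    with open(output_csv, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=CSV_FIELDS)
        writer.writeheader()
        for seg in segments:
            writer.writerow({
                "start": round(float(seg["start"]), 2),  # seconds from file start
                "end": round(float(seg["end"]), 2),
                "text": str(seg["text"]).strip(),        # Whisper text often has a leading space
            })
            rows += 1
    return rows


def transcribe_to_csv(audio_path: str, output_csv: str, model_size: str = "base") -> int:
    """Transcribe an audio file with Whisper and save its segments as CSV (hypothetical helper)."""
    import whisper  # pip install openai-whisper; ffmpeg must also be on PATH

    model = whisper.load_model(model_size)
    result = model.transcribe(audio_path)  # dict with "text" and a "segments" list
    return write_segments_csv(result["segments"], output_csv)
```

The Whisper import sits inside `transcribe_to_csv` so the CSV-writing half can be tested on its own without downloading a model.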

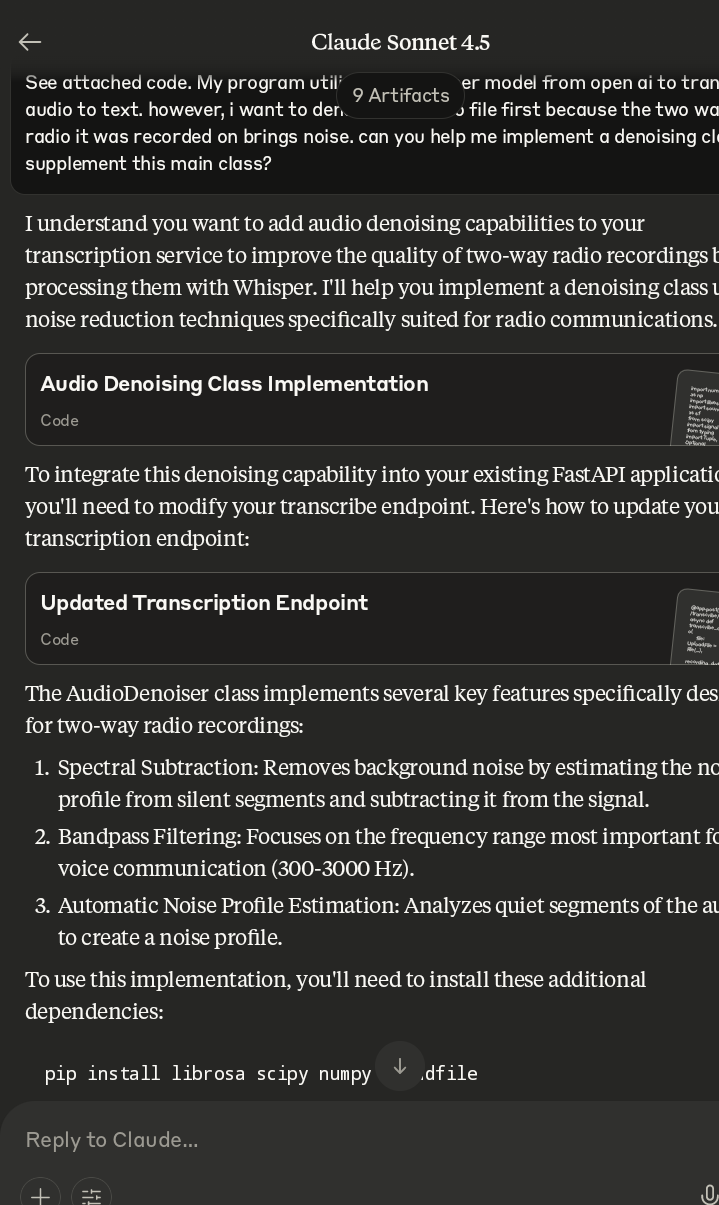

So we added a FastAPI endpoint to make testing quick and easy. It handled the file upload and the output download, returned error messages, and so on. A great way to keep things moving quickly!

I built out the code in PyCharm, adding functions for loading the Whisper model, uploading files via FastAPI, converting the output to .csv format, and delivering the final file back through the FastAPI endpoint.
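A minimal sketch of that kind of upload-and-download endpoint, assuming `fastapi` plus `python-multipart` (needed for file uploads). The route name `/transcribe`, the accepted extensions, and the `create_app` factory are hypothetical; `transcribe_fn` stands for whatever function turns an audio path into a CSV file.

```python
import os
import shutil
import tempfile
from typing import Callable, Optional

SUPPORTED_EXTENSIONS = {".mp3", ".wav"}  # assumption: the formats the prototype accepts


def is_supported_audio(filename: Optional[str]) -> bool:
    """Accept only file names with an expected audio extension."""
    if not filename:
        return False
    return os.path.splitext(filename)[1].lower() in SUPPORTED_EXTENSIONS


def create_app(transcribe_fn: Callable[[str, str], object]):
    """Build a FastAPI app whose /transcribe endpoint returns a CSV transcript.

    transcribe_fn(audio_path, output_csv) is any function that writes the CSV.
    """
    from fastapi import FastAPI, File, HTTPException, UploadFile
    from fastapi.responses import FileResponse
    from starlette.background import BackgroundTask

    app = FastAPI(title="Radio transcription prototype")

    @app.post("/transcribe")
    def transcribe(file: UploadFile = File(...)):
        # Plain `def`: FastAPI runs it in a worker thread, so slow ASR won't block the event loop.
        if not is_supported_audio(file.filename):
            raise HTTPException(status_code=400, detail="Please upload an .mp3 or .wav file.")
        workdir = tempfile.mkdtemp()
        audio_path = os.path.join(workdir, os.path.basename(file.filename))
        with open(audio_path, "wb") as out:
            shutil.copyfileobj(file.file, out)
        csv_path = os.path.join(workdir, "transcript.csv")
        try:
            transcribe_fn(audio_path, csv_path)
        except Exception as exc:
            shutil.rmtree(workdir, ignore_errors=True)
            raise HTTPException(status_code=500, detail=f"Transcription failed: {exc}") from exc
        # Remove the temp folder only after the CSV has been sent back.
        return FileResponse(
            csv_path,
            media_type="text/csv",
            filename="transcript.csv",
            background=BackgroundTask(shutil.rmtree, workdir, ignore_errors=True),
        )

    return app
```

To try it, something like `app = create_app(my_transcriber)` in a module served with `uvicorn`, where `my_transcriber` is your own transcription function, gives you an interactive upload form at `/docs`.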

After a bit of troubleshooting on model loading and file path issues, everything was sorted.

Next was adding a few key features:

- Denoising and cleaning,

- Metadata collection and validation,

- Accurate start and end times based on pauses in the audio file,

- Speaker identification, and

- A feedback field for model training.
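Pause-based start and end times can be approximated with a simple energy threshold. This is a minimal NumPy sketch under my own assumptions, not the production code: fixed 20 ms frames, a fixed RMS threshold, and a 0.3 s minimum pause are all hypothetical defaults that would need tuning on real radio audio.

```python
import numpy as np


def segment_by_pauses(signal, sr, frame_ms=20.0, threshold=0.02,
                      min_pause_s=0.3, min_segment_s=0.1):
    """Return (start_s, end_s) pairs for stretches of speech separated by pauses.

    A frame counts as speech when its RMS energy exceeds `threshold`; a silence
    lasting at least `min_pause_s` closes the current segment.
    """
    x = np.asarray(signal, dtype=float)
    frame_len = max(1, int(sr * frame_ms / 1000))
    n_frames = len(x) // frame_len
    frames = x[:n_frames * frame_len].reshape(n_frames, frame_len)
    voiced = np.sqrt(np.mean(frames ** 2, axis=1)) > threshold
    min_pause_frames = max(1, int(round(min_pause_s * 1000 / frame_ms)))

    spans, start, last_voiced, silent_run = [], None, None, 0
    for i, is_voiced in enumerate(voiced):
        if is_voiced:
            if start is None:
                start = i
            last_voiced, silent_run = i, 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_pause_frames:  # pause long enough: close the segment
                spans.append((start, last_voiced))
                start, silent_run = None, 0
    if start is not None:  # audio ended mid-transmission
        spans.append((start, last_voiced))

    frame_s = frame_len / sr
    return [(s * frame_s, (e + 1) * frame_s) for s, e in spans
            if (e + 1 - s) * frame_s >= min_segment_s]
```

Requiring a minimum pause keeps short gaps between words from splitting one transmission into many segments.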

Denoising the audio involved adding a new class that applied spectral subtraction, bandpass filtering, and automatic noise-profile estimation.

These features were built into the class with the Python library librosa. The resulting model outputs were a marked improvement over the original!
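Here is a minimal sketch of the same three ideas in plain NumPy rather than librosa, so it is an illustration of the technique, not the class described above. Assumptions (hypothetical, mine): the first half-second of each recording is noise-only, the voice band is 300 to 3400 Hz, and the bandpass is a blunt FFT-bin mask rather than a proper filter.

```python
import numpy as np


def _frame(x: np.ndarray, n_fft: int, hop: int) -> np.ndarray:
    """Slice x into overlapping frames of length n_fft, hop samples apart."""
    n_frames = 1 + (len(x) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]


def denoise(signal, sr, n_fft=512, noise_seconds=0.5,
            low_hz=300.0, high_hz=3400.0, floor=0.05):
    """Spectral subtraction plus an FFT-mask bandpass.

    Assumes the first `noise_seconds` of audio contain only background noise.
    """
    x = np.asarray(signal, dtype=float)
    hop = n_fft // 2
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n_fft) / n_fft)  # periodic Hann
    # Pad so every original sample is covered by two half-overlapping frames.
    extra = (-len(x)) % hop
    xp = np.pad(x, (hop, hop + extra))
    spec = np.fft.rfft(_frame(xp, n_fft, hop) * window, axis=1)
    mag, phase = np.abs(spec), np.angle(spec)

    # Noise profile: mean magnitude of frames inside the leading noise-only stretch
    # (frame 0 is skipped because half of it is zero padding).
    n_noise = max(1, int(noise_seconds * sr) // hop - 1)
    noise_profile = mag[1:1 + n_noise].mean(axis=0)

    # Subtract the profile, keeping a small spectral floor to limit "musical noise".
    clean_mag = np.maximum(mag - noise_profile, floor * mag)

    # Bandpass by zeroing bins outside the assumed voice-radio band.
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    clean_mag[:, (freqs < low_hz) | (freqs > high_hz)] = 0.0

    # Periodic Hann at 50% overlap sums to 1, so plain overlap-add restores the signal.
    frames = np.fft.irfft(clean_mag * np.exp(1j * phase), n=n_fft, axis=1)
    out = np.zeros(len(xp))
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame
    return out[hop:hop + len(x)]
```

Estimating the noise profile from the recording itself avoids needing a separate noise sample, at the cost of trusting that the opening moments really are quiet.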

Building the Startup

As these developments progressed, I began my product-market fit conversations again in earnest. And, amazingly, they bore fruit. After a few demonstrations and pitches to some folks in the industry, a former colleague wanted to help me further develop my startup.

KB&G Consulting hosts an innovation studio designed to bring new solutions to heavy industries, especially in Health & Safety, Human Resource Management, and training.

Over the past 7 months, I've enjoyed building and reworking my prototype into a fully fledged startup alongside Julia Georgi. We've assembled an expert software engineering team, brought in creative experts to redesign and rebrand, and are now moving into client and partner acquisition.

Canary Waves turns unstructured two-way radio chatter into structured safety and operations insight. Designed for high-risk, high-complexity worksites where real-time communication is essential but rarely analyzed, Canary Waves uses speech recognition and machine learning to flag safety risks, operational inefficiencies, and leading indicators buried in daily radio traffic.

I had rebuilt my startup. Now I actually have to help run it. We are currently looking for proof-of-concept partners to provide test data for the model from their site-based radio networks.

Email jack@canary-waves.com, reach out on LinkedIn, or check out our website for details.
